Monolake: Ghosts in the Machine

On the heels of the release of Ghosts, his latest album as Monolake, we spoke to Robert Henke about his recording process, unique approaches to instruments, designing virtual space and more.

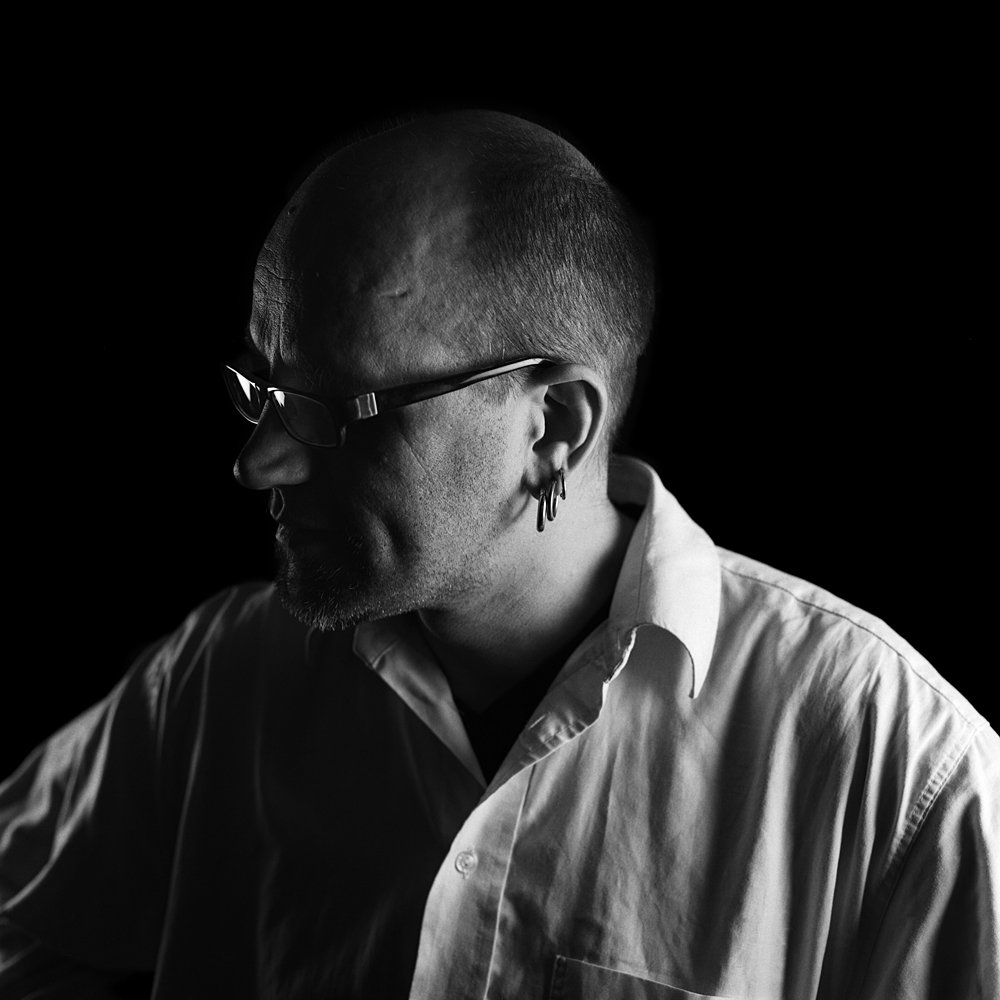

Monolake

Could you talk a bit about how you approached sound quality in making this album? How did it affect the composition process, and how has it affected the final results?

I find the term 'sound quality' a bit questionable; at least, it has to be defined before any discussion about it makes sense. In music, the results are always subjective and distortion and artifacts can very often not be separated from 'the signal'. Is a distorted bassline a technical problem or is it intentional? When we are talking about my music, we can simply omit the term 'quality' here and just talk about 'sound'.

I started to explore higher sampling rates mainly because I was curious to figure out whether anything interesting goes on beyond 20kHz and whether it would become audible when transposed down a lot. I have a pair of quite nice microphones that can capture signals way above 20kHz, and I started recording all kinds of sound sources at a 96kHz sampling rate with them. Then I played back the results with Sampler in Live and transposed the files down two octaves. The highest possible signals at 96kHz sample rate are around 40kHz. Transposing them down two octaves places them at 10kHz, so the 'hidden octave' is then exposed between 5 and 10kHz. When transposing down a sample recorded at 44.1kHz sample rate by the same amount, the result is either the typical downsampling artifacts when the interpolation is set to 'Normal' or 'No' in Sampler, or it becomes muddy when the interpolation is 'Good'. With the 96kHz recordings there is simply more spectral 'headroom' and more 'air'. Transposing the material down does not sound artificial anymore. However, I did not come across really exciting 'hidden signals' so far. It is a bit like taking photos in RAW instead of JPEG. In most situations it doesn’t matter, but sometimes - or for some kind of processing - the difference can be stunning.
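The arithmetic behind the 'hidden octave' can be checked in a few lines. This is only a sketch of the octave math described above, not of how Sampler resamples audio; the function names are made up for illustration.

```python
def transpose(freq_hz: float, octaves: float) -> float:
    """Frequency after transposing by a number of octaves (negative = down)."""
    return freq_hz * 2.0 ** octaves


def nyquist(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2.0


# The band above normal hearing (20-40 kHz), transposed down two octaves.
hidden_low = transpose(20_000, -2)    # 5000.0 Hz
hidden_high = transpose(40_000, -2)   # 10000.0 Hz

# Upper limit of any recorded content after the same transposition.
ceiling_96k = transpose(nyquist(96_000), -2)    # 12000.0 Hz
ceiling_44k = transpose(nyquist(44_100), -2)    # 5512.5 Hz

print(hidden_low, hidden_high, ceiling_96k, ceiling_44k)
```

Under these assumptions, a 44.1kHz recording transposed down two octaves has no recorded content above roughly 5.5kHz, while a 96kHz recording can still fill the range up to 12kHz.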

I very often resample my own material in order to create more complex textures. For the resampling process, the same rules apply as for the recording, so I decided to produce the new album entirely in 96kHz. This became possible since my current computer turned out to be fast enough to handle my projects at this sample rate.

Producing with variable sample rates in Live can be a bit of a challenge, and requires some discipline. Live plays back files of any sample rate at any given current sample rate of the soundcard. It’s easy to work on a project in the wrong sample rate without noticing. Monitoring the current sample rate of the sound card and having a close look at the render settings becomes a mandatory task. I am personally not concerned about artifacts created due to sample rate conversions - I simply do not hear them in 99% of all cases and I do not care if they show up in the spectrum in a laboratory setup. But what does make a huge difference is how instruments and effects sound. Almost all of my drums are created with Operator, and an essential part of my use of FM synthesis involves deliberate use of aliasing and truncation errors. All the hi-hat presets I created in Operator at 44.1kHz sound completely different at any other sample rate. I made it a habit to indicate the sample rate in the preset name now: 'Metal_Hihat_03_sr44', 'Zoink_sr96'. The difference in sound is also obvious for all sorts of bit-crushing and sample rate reduction plug-ins, and for many other distortion algorithms. In some cases I simply played back a specific track at 44.1kHz to preserve the sound, then froze that track and changed the sample rate to 96kHz. This is one of the things I really like about Live: there is a solution for almost every problem, though it is not very obvious sometimes.
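Why an aliasing-heavy FM preset changes character with the sample rate can be illustrated with an idealized folding calculation. This is a textbook sketch, not a model of Operator's internals; the carrier and modulator values are arbitrary examples.

```python
def aliased_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Where a partial ends up if it is sampled without band-limiting."""
    folded = freq_hz % sample_rate_hz
    # Anything above Nyquist mirrors back into the range 0..sample_rate/2.
    return min(folded, sample_rate_hz - folded)


def upper_sidebands(carrier_hz: float, modulator_hz: float, count: int) -> list:
    """Carrier plus the first `count` upper FM sidebands (carrier + k * modulator)."""
    return [carrier_hz + k * modulator_hz for k in range(count + 1)]


# Hypothetical hi-hat-like patch: 8 kHz carrier, 11 kHz modulator.
partials = upper_sidebands(8_000, 11_000, 3)   # [8000, 19000, 30000, 41000]

at_44k = [aliased_frequency(f, 44_100) for f in partials]
at_96k = [aliased_frequency(f, 96_000) for f in partials]

print(at_44k)   # [8000, 19000, 14100, 3100]
print(at_96k)   # [8000, 19000, 30000, 41000]
```

In this simplified picture, the two highest partials fold down to 14.1kHz and 3.1kHz at 44.1kHz but stay where they are at 96kHz, so the same settings produce a different spectrum.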

You told me that you initially had a different idea about how to mix and master the album and then changed your mind. Can you elaborate on this?

Well, I like to work at home. I once had a semi-pro studio room, but the space got sold at some point and I had to move out. Since that time I’ve worked in a small room with good acoustics, but it’s not by any means a real studio space. When working on Ghosts I had the idea in mind to only do a rough mix at home and later do the final mixdown in a 'real' studio, preferably also with an external engineer with a pair of fresh ears. It happened that I visited a friend of mine, Daniel Dettwiler, in Basel, who runs a mastering and recording studio. He had these amazing speakers made by a small Swiss company, Strauss, and I was completely blown away. They not only had the very big ones that would fit neither in my room nor in my budget, but also small near field monitors. One day I got the chance to try out these small monitors in my own studio. After that listening session I had to buy them immediately, and after some weeks of working with them I got so confident in my mixing that I decided to skip the external mixdown and only leave my home studio for the mastering, which then happened with Daniel in Basel. The whole production was done inside Live, and we played back the sets from my laptop in Basel and transferred the stereo mix to Pro Tools for the mastering, staying in 96kHz/24bit during the whole process.

Monolake workplace

Robert Henke’s home studio. Monitors: Strauss SE-NF3 and Genelec 8020A.

I noticed you also released the album in 24-bit/96kHz for download. I can understand why it makes sense to stay high-res during the production, but do you believe that this sounds better than 44.1 kHz on a CD?

Human hearing stops at 20kHz or even much lower. I am absolutely convinced that we do not hear anything above that boundary. However, I did extensive listening tests with Daniel, switching back and forth between the two versions, and we are both highly confident that we can hear a difference - especially in the perception of space. I have a theory here, but I did not find the time so far to talk with more knowledgeable folks about it: I simply believe that we are able to notice phase differences in the range of a single sample at 96kHz as soon as more than one speaker is involved.
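To put 'a single sample at 96kHz' into numbers, the following sketch computes the sample period and the distance sound travels in that time. It is plain arithmetic and neither supports nor refutes the theory; the speed of sound (343 m/s, roughly room temperature) is an assumed value.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air at about 20 degrees Celsius


def sample_period_us(sample_rate_hz: float) -> float:
    """Duration of one sample in microseconds."""
    return 1_000_000.0 / sample_rate_hz


def path_difference_mm(sample_rate_hz: float) -> float:
    """Distance sound travels during one sample period, in millimetres."""
    return SPEED_OF_SOUND_M_PER_S / sample_rate_hz * 1000.0


for rate in (44_100, 96_000):
    print(rate, round(sample_period_us(rate), 2), round(path_difference_mm(rate), 2))
```

One sample lasts about 10.4 microseconds at 96kHz and about 22.7 microseconds at 44.1kHz, which corresponds to a path difference of a few millimetres between two speakers.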

You mixed Ghosts in Live, with no outboard equipment. This was different from your initial plan to mix in a studio?

Yes, indeed. And it was quite a decision to make. I can see very valid points both for producing and mixing entirely in Live (or any other DAW) and for using an external mixing desk or outboard gear. It seems there is a lot of confusion about the benefits and problems of both variants. I almost feel obliged to comment on this, given the fact that I spent so much time on the Ableton forum, arguing about the 'sound quality' of Live. More or less, finalizing a production in Live without external mixing is first of all extremely cost-efficient, and this is a huge factor to take into account. A good DA [digital-to-analog] converter with 16 or more channels, plus a good analog console and other outboard equipment, is very expensive. Renting a studio with such equipment is also expensive, and maybe the money is better spent on something like a pair of good speakers, or a faster computer - or music lessons.

The second aspect is space and work ergonomics. In a small bedroom studio there is usually not enough room for a big mixer. Either the laptop is in the perfect listening spot or the mixer, but not both. Producing or mixing without sitting in the 'sweet spot' makes no sense. But even if all this can be arranged, there is still one other aspect and this is the really important one for me: I want to be able to archive the entire project with the mix easily, and I want to be able to work on different projects at the same time. This is almost impossible when including outboard effects and mixing, unless you have a bunch of studio assistants jumping around while you have a coffee break. During the creation of the Ghosts album I often switched back and forth between the different songs, and this is one of the main benefits of working entirely in one piece of software.

Now, after all that praise for mixing in the box, there must be a different perspective, too, and here it comes: In retrospect I do believe that mixing the album with an external mixing board and with a mixing engineer with fresh ears would have been a benefit. Not because of the sound quality in a technical sense, but for the work routine this implies: It is easy to lose focus when mixing inside a DAW. After listening to the same mix 5000 times, the creator of the music might miss things which are obvious to an external person doing the mixdown.

Here is an example: I managed to have one MIDI track playing in one of my songs with the track mixer set to -40 dB. I don't know anymore when or how this happened, I just did not notice it when finalizing the track. It only came to my attention later, when I created stems in order to turn my album into material I could play live. That track would have been a quite lovely addition to my drums and it slipped through. With the routine of laying out the whole set onto an external board, labeling the faders, and making oneself familiar with what goes on in each channel, this would never happen. And also there is something to the initial haptic access to EQs. My current perspective on the topic would probably be this one: I'll do it all in the box again, pretending to produce a final product, and then afterwards go to a studio and re-do the mix there. If it fails, I still have the all-in-Live version; if it turns out to be great, even better.

The one task I try not to do by myself under any circumstances is mastering. I simply need an external person to judge the sonic balance and to help me in making the right final decisions. I am always present in mastering sessions, and very often it comes down to a dialog between the mastering engineer and me, and I finally say, 'hey, we need to get rid of that mid rangy thing here,' or something like this. But it is important that the external and experienced person next to me is able to correct my vision when I am running in the wrong direction: 'No, I would not boost this band here more!'

There's a great sense of space on Ghosts – the parts tend to breathe but also become appropriately overwhelming at points. How did you approach getting these dynamics?

Space, especially in electronic music, is a very interesting topic. If you hear any acoustic instrument, the space is already there: different frequencies radiate with different intensities in various directions, and are reflected back from the room. There is a lot going on. In electronic music, none of this happens by itself - it all needs to be constructed. We are all space designers, sonic architects of our own worlds! I love to play with reverb, with dynamics; I love to listen to the decay phase of sounds and to get a feeling of things unfolding between my two speakers. There is no specific approach, I just listen and focus my attention on these aspects of my music. This is also why I am completely fine with what the Reverb in Live offers: not because it sounds 'good', or 'realistic', but because I know how to treat it in order to get the sonic palette I want. And, if I want something else, there are plenty of alternatives; other reverb plug-ins, convolution reverb, or even feeding signals to the outside world (which in my case is a small analog mixer), and a bunch of hardware reverbs. But it is actually quite rare that I use the hardware these days unless I want to heat up the room.

There's some impressive sound design on this album. Which Ableton Instruments were you using?

I very often start my tracks by throwing in an instance of Operator and creating a bass drum or bass line sound, starting from the default preset. I have a strong aversion to preset 'content', and the first thing I do when I get a new synthesizer is to get rid of the factory presets. I want to explore the machines by myself. Only at a later stage do I flip through the presets, to see if I overlooked something essential. Then, I go back to only using my own presets. I can afford that style of working since no one is sitting behind me and telling me that he wants that hip sound from artist X on his commercial. For me, creating sounds is a huge part of what I enjoy when working with electronics: being able to define my own timbres.

Operator is my workhorse. It's quite funny, because my original goal when designing it in 2004 as part of Live 4 was to create a nice 'entry level' instrument that covers the more basic FM stuff in the most effective way. At that time, I was working a lot with the 'big' hardware FM synths in my studio, and I wanted to make FM easy to use for Live users. But Operator grew more and more and we added a lot of nice details to it. In 2012 I barely touch hardware FM machines anymore, and use Operator for 70% of all my sounds: drums, pads, bass lines, etc.

Monolake Synths

A selection of Henke’s synths: PPG Wave 2.3 and NED Synclavier II (left); Sequential Prophet VS, Oberheim Xpander, Yamaha TG-77 and DX27 (right).

Other instruments come into the game when I want to add 'the specific extra'. There is already a nice groove going on, and I reach a stage where I start exploring my studio; playing with one of my 80s digital hardware monsters, or with other software instruments. What I am looking for at this stage is a very specific sound, something that has an iconic quality. This sound can be a recording, or a synthesizer, or an effect.

On Ghosts, notable contributions came from Tension, Corpus, Live’s warp engine, a Prophet VS hardware synthesizer, and a lot of recordings of metal objects. Tension is a highly problematic beast, and an instrument with a strong personality. I do not expect it to sound like a real plucked guitar or a violin, so I cannot be disappointed. I see it as what it is: a complex algorithm that tries to simulate some of the math that occurs when playing string instruments. I understand enough to know approximately what goes on, and the rest is experimenting with the settings until something happens that sounds cool.

In my track, 'Phenomenon', these plucked string - bouncing ball - ‘miouing’ sounds that carry it come from several instances of Tension. A huge part of the sound design is using the Multiband Dynamics device to bring out all the errors of the model. The ‘miouuuouuu’ part of the sound is the result of modulating some parameters with a Max for Live device. The sound itself is very low level, but the massive compression makes it 'the signature': the error becomes the theme. Modulation or the deliberate absence of it is also a key to my music. On the track, 'Discontinuity', the signature sound is a short impulse driving the Corpus device; I toggled the LFO that modulates the pitch. What makes the track is the contrast between the modulated and the static sound I achieve this way.

For me, Live is more a big modular synthesizer than a classic DAW. The ability to route audio internally, the ability to integrate Max, and also the warp engine are what make it fun - the quality of Live as an 'instrument' is what I enjoy. I'd like to explicitly mention the warping here, since this is again a technology where the musically interesting results come in large part from using it against its primary intention, or at least making its specific artifacts a part of the game. I love to resample textural sounds and then apply pitch shifting and time stretching as a way of sonic transformation; playing with different warp modes and their settings, resampling the result, filtering, resampling again, adding reverb, reversing, warping again, and so on. The possibilities are endless. I never use warping to actually match tempo. Audio files always sound horrible after time-stretching. You simply cannot stretch time, it is not possible without artifacts no matter what algorithm. If I slow down my speech on a computer by 20%, I sound like I’m on Valium and not like a thoughtful thinker - that's just how it is.

You designed the Monolake Granulator, an excellent Max for Live Instrument for granular synthesis. Could you point to a couple instances on Ghosts where it appears?

Several pad sounds are the result of using the Granulator. A lot of it can be heard on 'Unstable Matter'. The organ-like sound and the choir voices on 'Aligning the Daemon' are granular based.

You perform live using a surround-sound setup. How do you set this up with Live?

In the past, my sets for my live shows were slowly evolving and mutating creatures. At every soundcheck or idle time in a hotel room, I added or changed stuff. The sets became quite messy as a result, and only a few formal restrictions - like the number of channels - kept the chaos in control. For the 'Ghosts in Surround' tour I felt like doing it very differently right from the start, creating a live show that is tightly connected to the album and then is simply performed without re-inventing the wheel each night.

I started by defining an order in which I wanted to perform the tracks in a live situation, so I first had the general structure of the show in mind, before going into the details. Then, I made screenshots of the arrangements of all the tracks from the album and stared at them for a long time. I wanted to get an idea for three things: first, how are the tracks organized in terms of instrumentation? Second, where are similarities and differences? How is each track structured - where are breaks, loops, evolving parts? And third, how could I see these tracks being presented in a multi-channel surround setup? I wrote a lot of stuff on paper before actually working in Live. Then I defined a few general cornerstones: How many tracks will my Live set have (12)? What is the primary function of each track ('Bassdrum', 'Bassline', 'Chords', 'Snare 1', ...)? What elements do I want to pan around in surround and which elements do I want to be static or on all channels (e.g.: Bassdrum is static and comes out on all channels all the time)?

Then, I opened all my individual songs again in Live and exported stems. As a next step, I rebuilt the original songs using the stems, just to check if the magic was still there. I made quite some simplifications here already, in order to be able to perform the material more easily: I removed a lot of the effects before rendering the stems, so that there was room for adding effects more spontaneously in a live situation. Once this was done, I experimented with cutting the stems in individual clips and with re-arranging them in the Session View. The result was one long set with all the songs from the album as a long list of clips. I can navigate through them from top to bottom or jump around if I feel more adventurous. I can add effects on sends, change the mix, skip parts or play them longer. This all was a lot of work - it took me four weeks - but the result is worth it.

At this stage, I also added an additional MIDI track that sends out cue signals via Max for Live and OSC to the video rendering software run by Tarik Barri, my visual counterpart on the Ghosts Tour.
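For readers unfamiliar with OSC, here is a minimal sketch of what a cue message looks like on the wire, built by hand following the OSC 1.0 encoding rules. The address `/cue`, the integer argument, the host and the port are all hypothetical; the interview does not describe the actual messages, and the real setup sends them from a Max for Live device rather than from Python.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of 4 bytes (OSC 1.0)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: int) -> bytes:
    """Encode an OSC message carrying a single 32-bit integer argument."""
    return (
        _osc_pad(address.encode("ascii"))  # address pattern, e.g. "/cue"
        + _osc_pad(b",i")                  # type tag string: one int32
        + struct.pack(">i", value)         # big-endian int32 payload
    )


def send_cue(cue_number: int, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send a cue over UDP to a (hypothetical) video renderer."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/cue", cue_number), (host, port))


packet = osc_message("/cue", 3)
print(len(packet), packet)
```

Calling `send_cue(3)` would fire the packet at whatever is listening on the assumed port; UDP does not confirm delivery, so nothing happens if no receiver is running.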

Afterwards, I decided what kind of hardware controllers to use. I ended up with two units, each with 16 MIDI faders [Doepfer Pocket Fader], and an iPad running the Lemur App, providing some 30 more faders and a lot of buttons. I have plans for extending it with a second iPad for even more control.

As a result of the ability to control so many parameters, I also added a MIDI track to replace some of the material in the stems by MIDI clips plus synthesis, so that I can play with the synthesis parameters in real time. Mainly I play the sound of the bass lines live. Wobble is possible now. Just in case...

The last (and not entirely finished) part was the surround control. I needed to come up with a very flexible and robust scheme here, since the venues are very different and I have to be able to deal with numerous speaker setups. My setup is what I would call 4.2.2. This is four main channels (two in the front, two in the back) and two more channels that can be placed wherever they fit (to form a ring of six speakers, or to have additional speakers in the center of the stage, or from the ceiling), and two channels for the subs.

I use sends in Live to feed the different channels, and a Max for Live patch that allows me to dynamically control the sends. I defined a bunch of 'panning tracks' and I have an M4L device in each of these tracks that takes over the control of the sends. These devices are all controlled from one 'SurroundMaster' device in the master track, which provides all kinds of synced or free-running movements of the sounds on the panning channels. Currently I have circles, spirals, jumping movements, and static routings, but there is still a lot to improve. There is quite some spatial movement possible, but I am still learning how it all can make sense in a club or concert context. The instrument is ready, now I need to practice and learn: I want the spatial aspect to be as composed as the rest and not just a random effect, but I am not there yet.
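The idea of steering a sound around a speaker ring by controlling send levels can be sketched as follows. This is a generic equal-power panner written for illustration, not a reconstruction of the 'SurroundMaster' device (which is a Max for Live patch); the speaker angles and function names are assumptions, and at least two speakers are required.

```python
import math


def ring_send_levels(angle_deg: float, speaker_angles_deg: list) -> list:
    """Equal-power send levels for a source at angle_deg on a ring of speakers."""
    count = len(speaker_angles_deg)
    order = sorted(range(count), key=lambda i: speaker_angles_deg[i] % 360)
    levels = [0.0] * count
    for k in range(count):
        i, j = order[k], order[(k + 1) % count]  # neighbouring speaker pair
        span = (speaker_angles_deg[j] - speaker_angles_deg[i]) % 360 or 360
        offset = (angle_deg - speaker_angles_deg[i]) % 360
        if offset < span:
            # Crossfade between the two speakers that enclose the source.
            fraction = offset / span
            levels[i] = math.cos(fraction * math.pi / 2)
            levels[j] = math.sin(fraction * math.pi / 2)
            break
    return levels


def circle(phase: float, speaker_angles_deg: list) -> list:
    """Send levels for a circular movement; phase runs from 0.0 to 1.0 per lap."""
    return ring_send_levels(360.0 * phase, speaker_angles_deg)


# Hypothetical four main channels: front-right, back-right, back-left, front-left.
MAIN_RING = [45, 135, 225, 315]

for step in range(8):
    print([round(level, 2) for level in circle(step / 8, MAIN_RING)])
```

In a setup like the one described, each returned value would drive one send of a panning track; driving `phase` from the song position gives a synced movement, and driving it from a free-running clock gives an unsynced one.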
