Jonathan Zorn: The Uncanny Vocoder

Jonathan Zorn in his studio - photo by Ross McDermott.

There’s a history of artists adopting and amplifying what are initially perceived to be imperfections. Just look at the number of plug-in effects today that replicate the distortion characteristics of tube circuits, vinyl, or tape, or see how many modern productions introduce sampled crackle and tape hiss.

It’s in this tradition that electro-acoustic musician and academic Jonathan Zorn created his series of pieces, And Perforation, based on text from Sigmund Freud run through multiple generations of translation in Google Translate. Over time, the imperfections of the system take over the direction of the recited words.

And Perforation by Jonathan Zorn

With the release of And Perforation and its cousin-piece, Language as Dust, we spoke to Jonathan about working with vocoders and modular synths, dissecting language, and making a reactive performance setup with Live.

Can you walk through the process of assembling the texts for Language as Dust and And Perforation?

And Perforation - A friend lent me a Doepfer modular vocoder and it seemed a perfect opportunity to make a series of compositions engaging with Sigmund Freud’s essay The Uncanny. The idea that something can be both familiar and unfamiliar at the same time is so apt for encapsulating my fascination with synthesized voices. I first came across this connection in Mladen Dolar’s book A Voice and Nothing More.

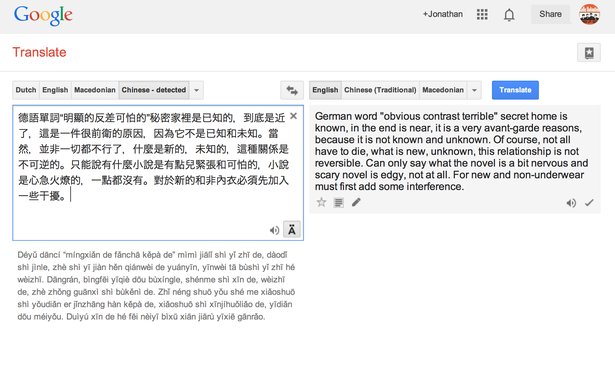

To create the text, I first used Google to translate the original German text into English. In “Obvious Difference Scary”, a short passage is iteratively translated into several languages and then back into English. You can hear the literal translation of “das unheimliche” become increasingly distorted. In “The Bizarre”, a longer passage of the text is treated with Microsoft Word auto-summarize and further Google translations. In “And Perforation” I exercised more editorial agency in shaping the linguistic mush created by all of the automated translations.

Processing Freud through Google Translate.
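The iterative translation described above can be sketched as a loop that passes a text through a chain of languages and back to English, keeping every intermediate version so the drift is visible. This is only an illustration, not Zorn's actual workflow: `round_trip` and `toy_translate` are hypothetical names, and the toy translator simply drops a word per pass as a stand-in for a real translation service, which would need to be plugged in with the same three-argument signature.

```python
from typing import Callable, List

# Hypothetical signature: (text, source_lang, target_lang) -> translated text.
Translator = Callable[[str, str, str], str]


def round_trip(text: str, languages: List[str], translate: Translator,
               home: str = "en") -> List[str]:
    """Translate through each language in turn, then back to `home`.

    Returns the original text followed by every intermediate version.
    """
    versions = [text]
    current_lang = home
    for lang in languages + [home]:
        text = translate(text, current_lang, lang)
        versions.append(text)
        current_lang = lang
    return versions


def toy_translate(text: str, source: str, target: str) -> str:
    """Stand-in for a real service (assumption): loses the last word each pass."""
    words = text.split()
    return " ".join(words[:-1]) if len(words) > 1 else text


if __name__ == "__main__":
    for version in round_trip("the uncanny is familiar and strange",
                              ["de", "fr"], toy_translate):
        print(version)
```

Keeping the whole list of versions, rather than only the final one, makes it possible to pick the generation where the text is distorted but still recognizable.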

The text for Language as Dust draws on Aristotle’s De Anima, Julia Kristeva’s writings on semiotic theory, Thomas Edison’s notes on the anticipated use of recording technology, misheard vocoder communications collected in Dave Tompkins’s book on the history of the vocoder, and Electronic Voice Phenomena (Konstantin Raudive’s transcriptions of his communication with the dead through radio static).

Some of the text manipulation techniques include MS Word auto-summarize, splicing multiple texts together (Burroughs-style cut-up), erasure, and translation. Through this process I became interested in using language as musical material. In “Meditation on Pattern and Noise”, for example, I repeat phrases and words to create patterns of sounds, creating a musical logic independent of semantic meaning.
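As a rough illustration of the cut-up splicing mentioned above, the sketch below chops several source texts into short word groups and shuffles them together. It is a minimal sketch under stated assumptions, not a reconstruction of how these texts were actually assembled: the function name `cut_up`, the fixed fragment length, and the seeded shuffle are all hypothetical choices made for the example.

```python
import random
from typing import List


def cut_up(texts: List[str], fragment_len: int = 3, seed: int = 0) -> str:
    """Splice several texts together by shuffling short word fragments.

    Each text is cut into groups of `fragment_len` words; the groups from
    all texts are then shuffled into one new sequence.
    """
    if fragment_len < 1:
        raise ValueError("fragment_len must be at least 1")
    fragments = []
    for text in texts:
        words = text.split()
        for i in range(0, len(words), fragment_len):
            fragments.append(" ".join(words[i:i + fragment_len]))
    rng = random.Random(seed)  # seeded so the same cut-up can be reproduced
    rng.shuffle(fragments)
    return " ".join(fragments)


if __name__ == "__main__":
    print(cut_up([
        "the voice is a kind of sound produced by a living being",
        "the phonograph will preserve the last words of dying persons",
    ]))
```

Every word of the input survives in the output; only the order changes, which is what lets patterns of sound emerge independently of the original meaning.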

Jonathan Zorn - Language as Dust

How did you create these pieces in the studio? Can you talk about using the Vocoder?

The process started out with diagrams that I used to determine durations, vocal effects, pitch sets, and texts. I then recorded the text and instruments, which include violin, double bass, piano, harp, modular synth, DSI PolyEvolver, and a 1930s tube radio tuned to the “foreign band”. Finally, I manipulated my vocal recordings using SuperCollider, Max/MSP, Ableton Live, and the speech research software Praat.

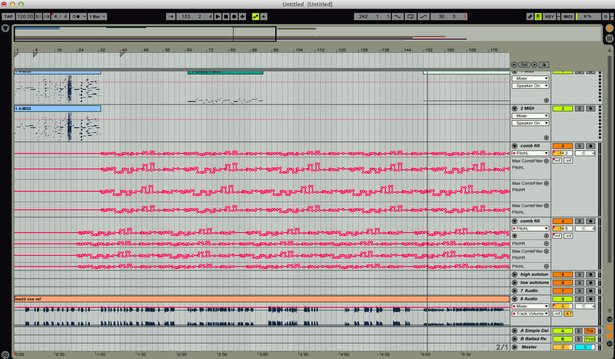

Automation in Looper from the creation of And Perforation.

The pieces in And Perforation were designed with live performance in mind, so I restricted myself to working with Live and the Doepfer modular vocoder. I ended up composing these pieces in Live because it made processing and routing audio to and from the vocoder very easy. Vocoders do best with consistent dynamics, so it was important to be able to add compression and EQ before and after the vocoder. Using Live also made it easy to quickly route different sound sources to the inputs of the vocoder, which was helpful both in developing the sounds and in performance. When I had to give the Doepfer vocoder back to its rightful owner, I was able to quickly recreate the pieces using Live’s vocoder.

How did you go about preparing the Language as Dust pieces for live performance?

The performance of these pieces is in some ways deceptively simple. I have most of the automation set up such that I press the space-bar and start talking. However, the timing of the text is quite precise and requires a great deal of attention to deliver it in a relaxed manner. In these pieces I attempt to disappear… however I have yet to completely escape the peculiarities of my own speech cadence.

With all of these pieces the initial mic input runs through a simple processing chain of compression, EQ, and a gate before hitting the vocoder or other effects.
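To make the role of that pre-vocoder chain concrete, here is a heavily simplified sketch of two of its stages, a compressor and a gate, operating sample by sample on a list of floats. It is not how Live's devices work internally: the function names and threshold values are assumptions, there is no attack or release smoothing, and the EQ stage is left out entirely.

```python
from typing import List


def compress(signal: List[float], threshold: float, ratio: float) -> List[float]:
    """Static compressor: reduce the part of each sample above `threshold`."""
    if ratio < 1.0:
        raise ValueError("ratio must be at least 1")
    out = []
    for x in signal:
        level = abs(x)
        if level > threshold:
            level = threshold + (level - threshold) / ratio
        out.append(level if x >= 0 else -level)
    return out


def gate(signal: List[float], threshold: float) -> List[float]:
    """Hard gate: silence any sample whose magnitude is below `threshold`."""
    return [x if abs(x) >= threshold else 0.0 for x in signal]


def mic_chain(signal: List[float]) -> List[float]:
    """Compress, then gate (EQ omitted in this sketch; values are assumptions)."""
    return gate(compress(signal, threshold=0.5, ratio=4.0), threshold=0.05)


if __name__ == "__main__":
    print(mic_chain([0.9, -0.9, 0.2, 0.01, -0.02]))
```

The point of the chain is the one made earlier in the interview: evening out the dynamics and removing low-level noise gives the vocoder a more consistent signal to work with.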

Since Language as Dust was conceived as a studio project, I’ve used Live and M4L to reverse-engineer the piece and create a performance version. So far I’ve only completed performance versions of the second and third sections.

Automated Comb Filters from “Meditation on Pattern and Noise”

For “Meditation on Pattern and Noise”, I use Autotune, Live, and Max for Live for comb filters and delays. Since I ended up using a sampled harp instrument, I was able to have some fun with the MIDI data. I used the M4L MIDI granulator followed by a Rack of Scale devices to create a range of chaotic MIDI events for the harp and Autotune.
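For readers unfamiliar with comb filters, the sketch below shows the basic feedback form, in which each output sample is the input plus a scaled copy of the output from a fixed number of samples earlier: y[n] = x[n] + g · y[n − D]. This is a generic textbook version written for illustration, not the Max for Live device used in the piece; the function name and parameters are assumptions.

```python
from typing import List


def comb_filter(signal: List[float], delay: int, feedback: float) -> List[float]:
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay]."""
    if delay < 1:
        raise ValueError("delay must be at least 1 sample")
    if not -1.0 < feedback < 1.0:
        raise ValueError("feedback must be between -1 and 1 to stay stable")
    out: List[float] = []
    for n, x in enumerate(signal):
        # Before `delay` samples have passed there is nothing to feed back.
        delayed = out[n - delay] if n >= delay else 0.0
        out.append(x + feedback * delayed)
    return out


if __name__ == "__main__":
    impulse = [1.0] + [0.0] * 9
    print(comb_filter(impulse, delay=3, feedback=0.5))
```

With positive feedback, the filter emphasizes frequencies at multiples of the sample rate divided by the delay, so a delay of 100 samples at 44,100 Hz produces peaks at multiples of 441 Hz. Automating the delay time, as in the caption above, sweeps that pitched resonance.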

In “Meditation on Presence and Absence”, the three instrument tracks and three synth tracks are pre-recorded. Each instrument track feeds two vocoder tracks, one in which my speaking voice is modulated by the instrument and the other in which the instrument is modulated by my voice. In the live version there are four vocoder tracks plus an instance of Sonic Charge’s Bitspeak to recreate the LPC vocal sound live. I also use a Midifighter 3D to play a library of synthesized phonemes. With both of these pieces all the modulations of the effects and the changing balance of the mix are controlled with a great deal of automation, sticking fairly close to the studio version.

As an electro-acoustic musician, how do you find the interplay between experimental computer music and playing more traditional instruments?

As a composer my process begins with a combination of conceptual clarification and sonic play. I come to electronic music as both a performer and a composer. I play the double bass, and the physicality of the instrument informs my work with electronic sounds. I learned how to work with electronic instruments and acoustic instruments at roughly the same time, and as a result, I don’t view my compositional work with electronics and acoustic instruments as fundamentally different. There are of course different limitations that one has to be aware of, but composers are always in dialogue with the constraints of sound production, whether working with a human performer with a physical instrument or a computer running synthesis software.

Jonathan Zorn piece for double bass and computer.

The instrumental writing in Language as Dust doesn’t allow for any improvisation and there is no spectral analysis on the instruments to create data for the computer to react to. What happens instead is that through the use of the vocoder and LPC processes, both my speaking voice and the instruments undergo various degrees of transformation.

Ultimately I am most interested in the interplay of sound and concepts, a kind of thinking through sound. I’ve found that computer technology enables me to do that in a way that makes sense to me. I foreground the sound and concept, not the technology used in the creation of the music. However, many of the conceptual conceits that I develop in my work are informed by my fascination with the history of sound technology and our human relation to these predominantly electronic inventions. There is something poignant to me about the interplay of acoustic and electronic sounds, particularly the combination of human voice and electronic sound, that seems to speak to the anxiety of our present moment.