Junichi Oguro: 3D Sounds and Producing with Visuals

In recent years the use of multi-channel surround sound systems has become ever more widespread. From standardized 5.1-channel home theater systems to much larger custom-built surround sound solutions for planetariums, museums and music venues, this expansion shows no signs of slowing down. At the forefront of this field, and raising expectations for its future, is the 3D sound system: a sonic environment built on a multitude of speakers that looks set to shape the coming years.

Not only does the 3D sound system replicate existing sonic environments, it also provides surreal musical experiences in an immersive space created with visuals, showing its enormous potential to push the boundaries of auditory expression. But what exactly is the 3D sound system? What does it offer when you create music for it and work with a cascade of visual information? And how do its approaches to production diverge from traditional stereo music-making?

To find answers to these questions, we reached out to composer, sound architect and producer Junichi Oguro. His projects range from designing the audio space for a planetarium featuring the 43.4-channel Sound Dome 3D sound system by Germany's ZKM to composing for adidas's 2008 Beijing Olympics project. The Sapporo-based Ableton Certified Trainer currently lectures at a university while working on many other projects, including a new ambient single set for release on his own 43d label.

As shown in his latest One Thing episode, the multidisciplinary practitioner draws inspiration from video, a topic he also touches on in this interview, where he discusses using visual information as graphic notation, how his interpretation of sound has shifted, and the new listening experiences that may lie ahead.

You worked as a sound architect to install Japan's first 43.4-channel 3D sound system in a planetarium. How did you first come to know the sound system?

Since 2000, I've been producing four- and eight-channel field recording pieces for personal projects. Harmonia, a sound art installation I created in the early 2010s, featured four super-directional speakers. I had heard of ZKM's Sound Dome back then, but it wasn't until about 10 years ago that I experienced it firsthand. At the time, I was in Belfast, Northern Ireland, reviewing and researching the technologies that would be needed for 3D sound systems to be widely used in Japan. The Sonic Arts Research Centre (SARC) at Queen's University Belfast had a 3D sound system called the Sonic Laboratory, and it was using what would become the basis of the current 43.4-channel system. In 2017, when Konica Minolta decided to install a new sound system at their planetarium in Tokyo, I thought the 43.4-channel Sound Dome system would work well with visuals and could deliver next-generation content under the planetarium dome. I then worked at ZKM on a project to bring the system to Japan and was appointed sound architect for the planetarium. I recently directed a program (in Japanese) and composed music for the venue using the 43.4-channel 3D sound system.

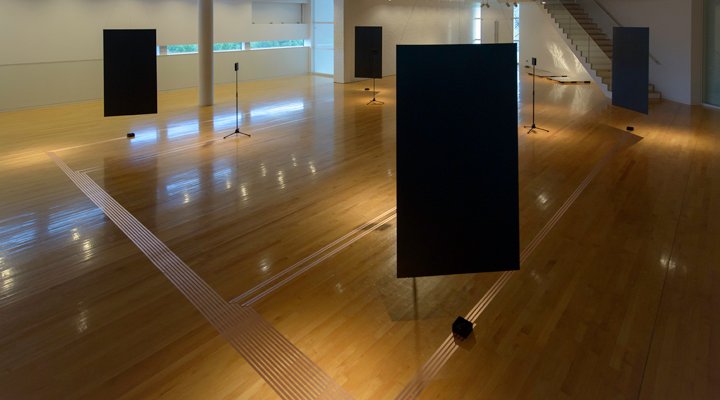

Harmonia: Junichi Oguro's 2012 sound installation

Could you tell me how the 43.4-channel sound system works?

It consists of hardware, meaning the speakers and the architectural acoustic design, and software that enables you to play sounds over the 43.4 channels. Both are required. With the system at the planetarium, you can reproduce an acoustic space using both Vector Base Amplitude Panning (VBAP) and Ambisonics, an immersive 3D audio format. VBAP works with triangles of three speakers and places a sound in 3D space by combining the speakers' direction vectors. Arranging speakers in triangles that optimally surround the dome requires 43 separate units. Starting from a speaker at the top of the dome, 14 layers of speaker triangles are laid out evenly along the dome's contours.
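To make the vector idea a little more concrete, here is a minimal sketch of VBAP for a single speaker triangle, following Ville Pulkki's general formulation rather than the software actually running at the planetarium. A source direction p is written as a weighted sum of the three speakers' unit direction vectors, p = g1·l1 + g2·l2 + g3·l3; solving for the gains and normalising them to constant power gives each speaker's level. The coordinate convention (x forward, y left, z up), the function names and the speaker angles in the example are all hypothetical, not the Sound Dome's real layout.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def unit_vector(azimuth_deg: float, elevation_deg: float) -> Vec:
    """Direction from the listener as a unit vector (assumed: x forward, y left, z up)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def det3(a: Vec, b: Vec, c: Vec) -> float:
    """Determinant of the 3x3 matrix whose columns are a, b, c (scalar triple product)."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


def vbap_gains(source: Vec, l1: Vec, l2: Vec, l3: Vec) -> Optional[Tuple[float, float, float]]:
    """Gains for one speaker triangle, or None if the source lies outside it."""
    d = det3(l1, l2, l3)
    if abs(d) < 1e-9:
        raise ValueError("speaker vectors are coplanar; not a usable triangle")
    # Cramer's rule for g1*l1 + g2*l2 + g3*l3 = source
    g = (det3(source, l2, l3) / d,
         det3(l1, source, l3) / d,
         det3(l1, l2, source) / d)
    if min(g) < -1e-9:
        return None  # a negative gain means the source is outside this triangle
    # Constant-power normalisation: the squared gains sum to 1
    norm = math.sqrt(sum(x * x for x in g))
    return (g[0] / norm, g[1] / norm, g[2] / norm)


if __name__ == "__main__":
    # Hypothetical triangle: one speaker in front, one to the left, one at the zenith
    front, left, top = unit_vector(0, 0), unit_vector(90, 0), unit_vector(0, 90)
    print(vbap_gains(unit_vector(45, 0), front, left, top))   # split between front and left
    print(vbap_gains(unit_vector(30, 30), front, left, top))  # all three speakers contribute
    print(vbap_gains(unit_vector(180, 0), front, left, top))  # behind the listener: None
```

A source halfway between the front and left speakers gets equal gains on those two and none on the zenith speaker. In a full dome, a renderer would look for the triangle in which all three gains are non-negative, which is why the speakers need to be laid out as a continuous mesh of triangles around the listener.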

How did you learn the techniques and cultivate your understanding in this field?

Multi-channel production entails preparing hardware tools as well as designing the necessary software yourself. In my case, I often built applications for multi-channel audio in Max/MSP and used them in my productions. This field has been led by European acoustic research institutes such as IRCAM and ZKM, and I studied how 3D sound systems are used through the documentation of projects carried out there. At the actual installation stage, you work as a team with experts from various fields, and you need to move between those fields to connect their techniques. I learned the latest expertise from them.

What's important about the 3D sound system is that having the hardware alone is pointless. It's crucial to consider what kind of musical pieces and content you can make with it. I also worked for a Japanese company making acoustic measurement equipment, where my tasks dealt with architectural acoustics such as echoes, impulse responses and anechoic chambers. That is how I built a multifaceted understanding: by approaching the musical side as a composer and the acoustic side as a sound architect.

What influence has working with 3D sound systems and multi-channel environments had on your music-making?

I've become more conscious of the overall tonality. I consider stereo imaging the basis of multi-channel work. It's important to me that a piece can also stand on its own in stereo before I think about its movements or textures in 3D space. Multi-channel systems are a bit like precision machines, and how a space sounds is pretty much down to the creator's imagination. In my case, I'm always conscious of how real spaces sound, whether in everyday life or in field recordings, and I draw on that experience in my productions. I think listening has become a more conscious act for me than before.

In stereo, reverb and delay are often used to express depth. How do you create depth with a 3D sound system? Is there any technique that's characteristic of the system?

With a 3D sound system, sounds are treated as objects in a space. Unlike the planar depth of two-channel stereo, the location of each object is the location of the sound itself. For instance, you can express depth by placing a sound toward the rear of the 3D space. It's also possible to express depth with reverb and delay, as you would with two channels. I feel the overall tonality matters just as much whether you're working in stereo or with a 3D sound system.

The best part of the 3D sound system is that it can considerably reframe the existing rules of listening. Traditionally, the L and R channels stay fixed wherever the listening point moves, but a 3D sound system can freely define front, rear, left, right, top and bottom, and can even be used to create a cosmic space. I do field recording too, and I use the system to replicate the sonic environment I hear in a jungle, or to create and present a completely different listening space. It's this new listening experience that will lead to new forms of sonic expression. Compared with sound in virtual reality, the 3D sound system has an enormous capacity to reproduce space. As a sound system for the coming era of 3D visuals and immersive spaces, it also has great potential for visual work as well as for entertainment such as theatrical pieces.

On the other hand, I think binaural recording is a very interesting 3D sound technique for two channels. It has its limitations, and you have to listen on headphones, but I think recording spaces with binaural mics will be applied in more fields in the future.

What is your workflow like when you produce 3D sound pieces?

I usually compose the music first in a DAW like Ableton Live, then create a digital 3D space and the movement of every sound in software dedicated to that purpose. The challenge is that you already need a clear image of the movement at the composition stage. Music expresses the progress of sound over a timeline. The 3D space adds another parameter, the location of each sound, which makes the range of expression much wider. It also makes production take longer. With currently available DAWs, it is still cumbersome to exchange as many as 100 or 300 channels of tracks for a 3D sound system between platforms and export them individually. So I've explored my own ways, building a dedicated hardware setup to transfer the data over MADI (Multichannel Audio Digital Interface).

In your One Thing episode, you show a method of making music by syncing it with visuals. That seems very characteristic of you, given that you have produced music for TV ads that fits their visuals.

This method grew out of my experience composing music for adidas's project for the 2008 Beijing Olympics. At the time, I started composing with only a storyboard for reference. As the actual 3D computer graphics came in, I adjusted the music to reflect the footage. The music developed alongside the video as it came together into something stunning toward the end of the project, and that made the process particularly memorable. It has since become a fundamental part of my music-making. With the current version of Live, you can bring movie files onto the timeline as video clips and cut them just like audio clips. This is a crucial feature when making music from visual pieces, and being able to handle them freely on the timeline gives music-making extra flexibility. In One Thing, I show what I've developed from this approach: putting videos on the timeline and using them as a kind of graphic notation.

Is there a technique or a particular device you find especially useful when you compose in this visually-oriented way?

Strictly speaking it's a video editing tool, but I often use DaVinci Resolve by Blackmagic Design to cut up visual sources and adjust their colouring. I use the paid version, but the free version is capable enough for a wide variety of edits. It lets you edit video in a musical way, much like arranging in Live. I have a strong feeling that, down the line, more music makers will start creating audio-visual pieces this way.

In your One Thing episode you are using high-quality film equipment. But if you come across an unexpected filming opportunity in town and don't have your gear with you, do you use a smartphone to capture the scene?

I normally carry a portable recorder for field recording and an SLR camera that can shoot video, but when I don't have them, I film with my smartphone. Smartphone cameras are now about as good as film-industry cameras from only a few generations ago, and I pay attention to the angle of view and the resolution when filming. In the university course I run, students shoot videos with their smartphones and make music the way I did in One Thing. I walk through the process here (in Japanese). You can download a free Live project file that includes the video clips I used in this tutorial.

Download Junichi Oguro’s free Live project file to create music with video clips

*Requires Live 10.1 Suite or later

The motion you see in a video is a great source of ideas for melodies and rhythms, much like graphic notation. The movement of people is particularly inspiring. Whether it's contemporary dance or the casual gestures of everyday life, ideas for composing are there in all kinds of visual information. As listening habits change radically, more and more people consume music through YouTube and Instagram. If it becomes possible to use third-party videos from platforms like these to express your ideas in audio-visual productions, we'll see both the methods of music-making and the ways of listening evolve even further.

Keep up with Junichi Oguro on his website, Facebook and Twitter