Extending Live: How Three Different Artists Approach Visuals for Live Performance

In a performance setting, visual expression can be just as vital to the show as the music itself. For performers who want full control over the audio and visual experience, Live can act as a bridge for both parts of the equation. While some users may already be familiar with the many ways to make this possible (the topic has been discussed both in past articles and interviews and in conversation at Loop), here we aim to demystify some of the technical approaches you can use to create visuals to accompany music within Live. We spoke to three audiovisual artists using Live as their performance hub, and asked them to break down a recent work intended for live performance environments.

The artists we spoke to use a range of tools and workflows, including Max for Live, third-party video software, and DMX lighting programmed within Live. These solutions vary in technical complexity, and are intended to encourage users of all levels to explore the many possibilities of adding visuals to a performance.

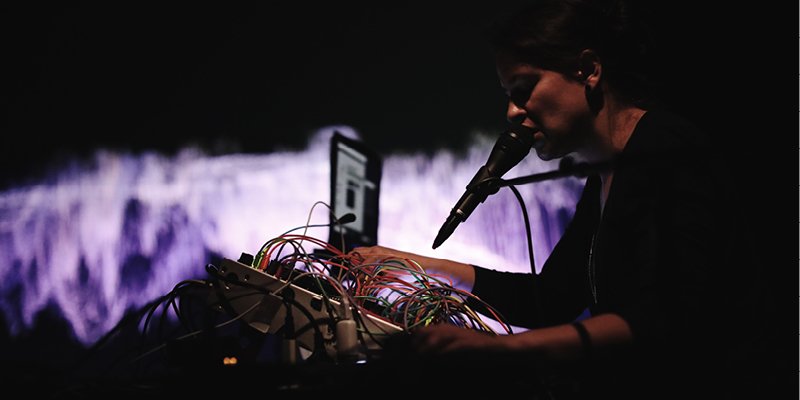

Sabina Covarrubias: Viaje

Sabina Covarrubias is a composer and multimedia artist originally from Mexico City, Mexico, currently residing in Paris, France. Her audiovisual works are often classified as visual music, electroacoustic music, electronic music, symphonic, choral, algorithmic photography or live audio-reactive visuals. Her recent piece Viaje consists of 42 minutes of live electronic music and live generative visuals, made with her modular synthesizer and a vocoder, alongside visuals made with Jitter, a set of objects used to create visuals in Max/MSP.

Sabina Covarrubias – “Viaje”

Covarrubias started learning how to use Jitter during her PhD studies in Multimedia Scores for Music Compositions at the University of Paris. She became increasingly interested in creating a visual representation of sound, and was introduced to Jitter as a specialism at IRCAM (Institute for Research and Coordination in Acoustics/Music). We had the opportunity to dive into further detail about how Covarrubias used Jitter within Max for Live to create the visuals for Viaje. She also provided us with a tutorial and a free Max for Live patch to help you get started.

To begin, I am curious to hear about the inspiration behind Viaje, and how that is translated musically and visually?

“Viaje” means "travel" in English. In this case I’m referring to travel across the soul, inspired by ideas of Shamanism. My parents called me Sabina after a Shaman with the same name. I'm not a specialist, but in Shamanism, they say that “travelling” is a way to explore your own soul. The intent of the piece was to create this “travel” within myself - however, I don't like saying that in program notes, because I want each person to imagine whatever they want.

The piece is structured in sections, where each section corresponds to a part of the trip. In a way, it’s about travelling from chaos to order, and the piece is structured the same both sonically and visually. In total there are six different pictures, but all of them are unified by bridges.

How do the music and the visuals relate? Did it start with the musical ideas or visual ideas, or were you working on them both side by side?

When I'm composing, the audio very often comes with images. So when I was recording Viaje, I would first start with an audio part, but I was already imagining the visuals that would go along with it.

Wow. What kind of things are you visualizing? Shapes, colors?

Nothing so specific, but for example, there's a part that sounds to me like a lake with frogs, so I was already visualizing animals and jungles, something related to nature. So for the visuals in that part, I worked with an algorithm called Boids that emulates the movements of birds - it looks like bugs or bees flying around.

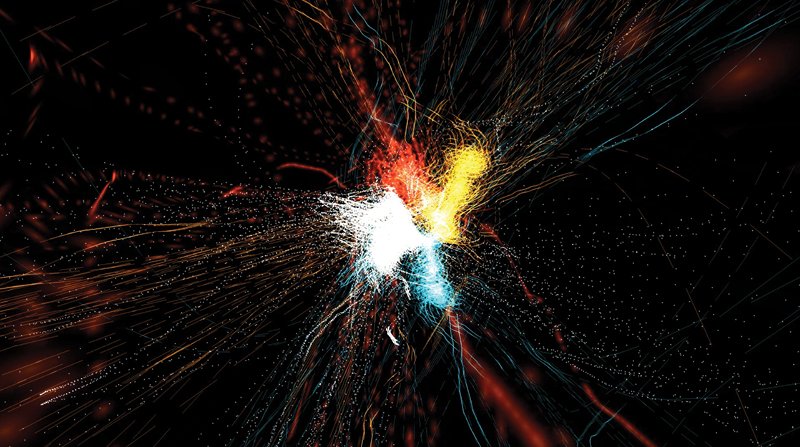

A still from the section using Boids algorithm
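For readers unfamiliar with Boids, the flocking behaviour comes from three simple rules applied to every agent: steer towards nearby neighbours (cohesion), match their heading (alignment), and avoid crowding (separation). The following is a minimal Python sketch of those rules, not Covarrubias' Jitter patch; the class name, function names, and all parameter values are assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def make_flock(count, size=200.0, seed=0):
    """Scatter `count` boids at random positions with small random velocities."""
    rng = random.Random(seed)
    return [
        Boid(rng.uniform(0, size), rng.uniform(0, size),
             rng.uniform(-1, 1), rng.uniform(-1, 1))
        for _ in range(count)
    ]


def step(boids, radius=50.0, sep_dist=10.0, cohesion=0.01,
         alignment=0.05, separation=0.05, max_speed=4.0):
    """Return a new flock advanced by one frame; the input list is not modified."""
    updated = []
    for b in boids:
        # Neighbours are all other boids within `radius` of this one.
        neighbours = [
            o for o in boids
            if o is not b and (o.x - b.x) ** 2 + (o.y - b.y) ** 2 < radius ** 2
        ]
        vx, vy = b.vx, b.vy
        if neighbours:
            n = len(neighbours)
            # Cohesion: steer towards the neighbours' average position.
            vx += (sum(o.x for o in neighbours) / n - b.x) * cohesion
            vy += (sum(o.y for o in neighbours) / n - b.y) * cohesion
            # Alignment: nudge velocity towards the neighbours' average velocity.
            vx += (sum(o.vx for o in neighbours) / n - b.vx) * alignment
            vy += (sum(o.vy for o in neighbours) / n - b.vy) * alignment
            # Separation: push away from neighbours that are too close.
            for o in neighbours:
                if (o.x - b.x) ** 2 + (o.y - b.y) ** 2 < sep_dist ** 2:
                    vx += (b.x - o.x) * separation
                    vy += (b.y - o.y) * separation
        # Limit speed so the flock stays stable.
        speed = (vx * vx + vy * vy) ** 0.5
        if speed > max_speed:
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        updated.append(Boid(b.x + vx, b.y + vy, vx, vy))
    return updated
```

Calling `step(make_flock(50))` once per video frame and drawing a point at each boid's position gives the swarming motion described above. In an audio-reactive setting, one plausible design is to drive a parameter such as `max_speed` or `cohesion` from the audio level.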

Did imagining visuals when you hear audio always come naturally to you, or is that something you developed over time?

I think it happens naturally, but it also has to do with my musical background and orchestration lessons. In order to understand concepts in orchestration, I needed to visualize them in my mind. Seeing the movie Fantasia as a child really helped me to develop that, because the sounds and images were so close! Soon enough, it happened naturally for me - I'd be listening to something, then begin visualizing that sound in my imagination.

Fantasia is a great example - I need to revisit it! How much of the audio and visuals in Viaje is improvised? Do you have a philosophy on improvisation when it comes to live performance?

Some parts are pre-recorded for the video and audio, but the elements that can be varied when I'm performing are the vocoder and the percussion. Similar to the architecture of a building - there are parts that will never change, because they're the structural columns of the work, but in the middle I can extend parts or make them shorter.

Can you break down your setup for the live performance of Viaje - for both the audio and visuals?

It really depends on the show. If I want the improvisation to be more flexible, I do it in Live, and if I want the audio to be more fixed, I do it with just the modular synthesizer.

For the latter case, I run the visuals on a dedicated PC - a 15-inch Alienware laptop with Nvidia GTX 1070 GPU. I have a Max/MSP patch that processes the visuals live with Max standalone (for information on Max for Live vs Max/MSP standalone, visit the Ableton Knowledge Base). For the audio, I just have a modular synthesizer - I use two Intellijel Plonk modules, a Mutable Instruments Plaits and a 2HP Kick for percussion; a Roland VP-03 for the vocoder; and a Bitbox mk2 by 1010 Music to play back the pre-recorded parts. When I work this way, the visuals and sounds are divided.

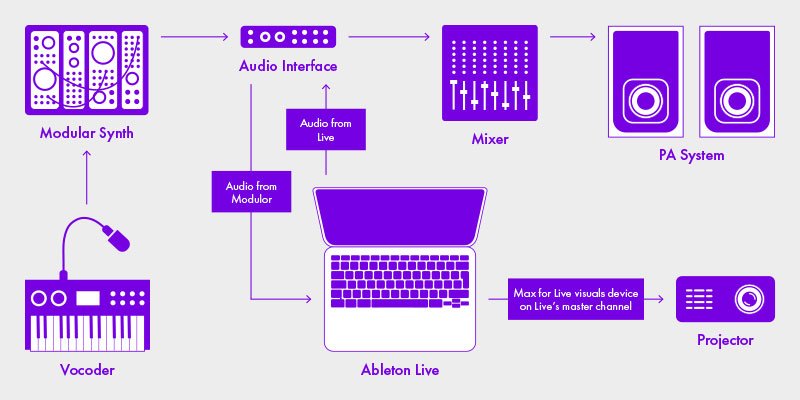

If I want the performance to be more improvised I do it in Live, because it has much more flexibility. In the more fixed setup, the sounds from the modular synthesizer are already mixed, but with Live I can leave most parts unmixed, and I can trigger them at different moments. For this setup, I have one computer running audio through Live and the modified Max patch running as a Max for Live effect to process visuals. The output of the modular synthesizer goes into an input track channel in Live, and I have a Max for Live device on the same channel to produce the images.

Routing diagram for Covarrubias' live performance setup

When you’re using Live to process audio and visuals, do you ever struggle with CPU overload issues? Do you have any suggestions to minimize CPU use, either on the Ableton side or the Max side?

This is a difficult topic, but the solution I have found is to program the Max patch as much as possible using Jitter’s OpenGL objects, which run on the computer’s GPU instead, leaving more of the CPU free for audio processing. This is why it’s very important for me to have a good GPU.

What do you think are the advantages or disadvantages of using Max for Live to generate visuals, as opposed to other software that’s dedicated to the visual world?

The advantage of using Max/MSP inside Live is the marriage between the potential of the two programs. Max has objects that are dedicated to performing sound analysis and MIDI analysis, so it's really easy for a user to start building interesting things right away without knowing much about programming.

You can think about Live as the brain, sending information to your synthesizers to control them, and providing these messages for the visuals via Max for Live at the same time - it all happens in the same software. You can grab any information from Live and process it with Max for Live.

I know you have a lot of experience teaching people how to make visuals with Max and Jitter. Do you have any general advice for musicians who use Ableton Live and want to get started with visuals?

I think it’s best to start by going step-by-step into the world of Max for Live and try to understand Jitter. On my website, I have compiled a list of resources on how to create Max for Live devices using Jitter and OpenGL. I would start there, then start to learn Jitter, and remember to always use GL objects when you’re programming visuals to run inside of Ableton Live via Max for Live - this will save you vital CPU resources!

Jess Jacobs and Pussy Riot

Jess Jacobs is a Los Angeles-based music technologist, mix engineer, and multi-instrumentalist composer, performer and producer, blending categories as often as she can. Before all her tours were cancelled in 2020, she began collaborating with Russian punk band and feminist performance art collective Pussy Riot. Here, we get a glimpse into the many roles that Jess performs, with additional insight from Pussy Riot's Nadya Tolokonnikova, who works on the creative vision for the group. The pair told us how they worked together to create visuals for their upcoming tour.

Nadya, what is the vision for the upcoming Pussy Riot performances, and how does your vision for the onstage aesthetic reflect that?

Nadya: It’s a really visually-driven performance. The music is important as well, obviously, but to me personally and to other members of Pussy Riot, the visual side of things was always as important as the music. Sometimes we even describe it as an audiovisual performance piece, rather than a traditional music concert.

How was the visual content determined? Where is the source material for projections from?

Nadya: It depends. We don’t usually get stuff from the internet, but if we do, we ask for permission. It's mostly stuff that we’ve filmed. I direct and operate the camera. Sometimes there are other DPs, but I edit and color correct too. I work on most of our videos by myself, except for about 30% of the time, when I collaborate with visual artists from all over the globe - from Argentina to Russia, and even remote places in Siberia.

How did you communicate your aesthetic vision to Jess? What did that collaboration look like?

Nadya: It was overwhelming. I was like, “Okay we need to do this and that, and that…” and Jess just said, “Okay, this is how we’re going to do it.” I actually didn’t expect to be able to find a person who could operate video, lights, and music at the same time, because I’ve never seen anyone doing that before. Before, I would travel with one person who does visuals, and one who operates lighting, and another person who does audio, which is how it usually works, but Jess was able to combine all of those things somehow.

What was the workflow?

Nadya: I don’t know, Jess, did we have a workflow? Every performance is pretty chaotic. Sometimes we have two members, and other times we have 20. When it comes to Pussy Riot, I wouldn’t say that we have anything really stable - it’s very ad hoc. I don’t think there is such a thing as a “workflow”. So I think Jess is responsible for bringing an organizational vibe into what we’re doing. That’s useful when it comes to touring, because when you’re doing one-off shows you can go crazy and create everything from scratch, but when we’re performing every day it’s more difficult.

Jess: It’s like beautiful, organized chaos [laughs].

So, it sounds like there is a concrete link between the visuals and the music. At your concerts, do the videos you show correspond to the lyrics you’re singing or are they more abstract?

Nadya: They do [correspond]. We are creating audiovisual propaganda - propaganda for our amazing progressive values! Our ideal scenario is that a person goes to a Pussy Riot show, and when they leave, they want to go protest; they want to go and occupy a local government building! So, if we’re capable of inspiring people to do that, we know that we’re doing a good job. In a lot of ways, it relies on a lot of big, in-your-face letters, but it doesn’t have to be the literal lyrics from the song. It can just be the main ideas from the lyrics, or questions that people need to ask themselves when they listen and see the performance. So, it’s all sorts of interaction with the audience that provokes them to act.

I mean, anything that can put you in a certain mood, and in a lot of situations the mood is pretty dark, because we sing about serious political issues like police brutality, or climate destruction. But also sometimes, we like to shock people with a sudden celebratory video or song and finish on a positive note, because I don’t want people to come out of the concert in an overwhelmingly negative mood. I feel like that’s what they get when they read the news. I want to give them some dark mood, but also fill them with hope, through actions and through changing our attitudes, and help them to realize that solidarity can actually save us, and help us overcome those negative moods. That’s what this combination of music and video should create in you when you see a Pussy Riot performance.

Members of Pussy Riot. From right to left: Nadya Tolokonnikova, Bita Balogh, Rachel Fannan, Maria Baez

Jess, I know that in the last couple of years you’ve worked predominantly as a sound engineer, but your role has expanded working with Pussy Riot. Can you explain each of your roles for the upcoming Pussy Riot performances?

Jess: It’s kind of been expanding - I’ve almost always done playback as well as front of house, so that’s always been a piece of it. The newest piece is definitely the lights and the video. That was my first foray into the world of Resolume and VJ stuff. So, I was a tour manager and production manager. Production manager meaning: I take in what Nadya and the team want to do, and then order everything necessary to make that happen, whether that’s at a festival that they’ve flown to and I’m not with them, or an event that I’ll be at in person. If we need specific pieces of equipment, that’s stuff I would do. Or procuring laptops. How are we breaking things up on stage? Are we networking things? It’s kind of the bird’s-eye position.

Within that, I’m mixing front of house sound for them. I’m mixing monitors from FOH for them. Maria, the guitarist and keyboard player runs the playback, but I do all of the stem mixing and prepping the computer from the beginning. I install and configure everything.

I also program the lights in Live, and I program the video cues in Live. So I don’t know how many hats that means I’m wearing already...

Then, I also ingest all of the video material and throw that into Resolume, and take care of networking and put that whole universe together so that things are automated and predictable and hopefully foolproof.

So you do all of that behind the scenes, and then during the shows, you’re doing FOH and the guitarist is triggering the live playback [for audio and visuals]?

Jess: Yes, I set it up so it’s very easy. We just use Setlist, a Strange Electronics plugin in Live, and Live is the brain, so it just sends out MIDI notes to whoever needs to listen. Whether it’s the DMX box or Resolume, or a patch change for Nadya’s sub bass synth or something like that.

Pussy Riot member Maria Baez at tour rehearsal in Los Angeles, CA

How and why did you end up taking on the visual aspects of live performance?

Jess: It was just something that had to be done, you know? Basically, I like to approach any project with the attitude of, “I don’t want to say no.” So, if there’s a way that it can be done - if I can think of a way, or find a way via research - then it could be done.

Previously, they were running stuff using actual video clips in Live, and I was worried about the stability of the hardware at that point, because it’s a lot for one machine to be handling video and audio. Having so much experience with playback in the past, and having seen and fixed major issues, I’m critically aware. Also, as a software engineer, I’m aware of machine performance and the need to keep machines cool and low on resource consumption. So when they came to me, I was like, “This has to be split out onto a separate video laptop that’s going to be easy to configure for all of the different places we could end up.” We were supposed to do a bunch of crazy festivals and fly dates this year, so I knew that everything needed to be really easy to set up, and I didn’t have the budget for a VJ or LD, so we just figured it out.

Can you explain what the different visual elements you have going on for the live performance are?

Jess: Totally. I’m always going for reliability and ease of reproduction of a show as much as possible. We knew that we wanted lights at certain points - strobes, at least. The rest of the light direction is pretty simple and very straightforward. There’s no front lighting and no movers really, and the point of that is to really showcase the video. As Nadya said, the video is such an important part of the performance. We knew that we wanted to have some emphasis on certain parts, so Nadya asked if I could get a couple of strobes and that’s what we started with. There were 2–4 strobes depending on the size of the stage, and those might be strobing white, or red, or blue - they’re multicolor, so it depends on the color temperature of the song. So we sat together and went through the set one day in production rehearsals, and she told me where she wanted strobes, and I programmed it in, and that was that.

So there’s lights, and then there’s video. We had a projection screen, or at festivals there’d be an LED wall. So we’d have a projector that the Resolume laptop is hooked up to, and that was the video component.

There were some other elements. There’s a lot of decoration like on this table [points to an instrument stand], and there’s some Pussy Riot signal tape. There were some stationary lights too, like a couple of sirens that they would turn on and off throughout the performance, but those aren’t automated.

There are also dancers. They always have dancers.

How is the Live Set organized in Ableton Live?

Jess: I prefer to work in Arrangement View for stuff like this, because of the nature of the group. As Nadya was saying – it’s controlled chaos. They might want to change something during a soundcheck for example, so for that reason I don’t love using Session View because it’s so variable. So, we use Arrangement View, which also lets me use Setlist (plugin). I really like using Setlist, because then if they want to change the position of a song in the Set, it’s just super simple.

So Arrangement View. Locators. Setlist picks it up. MIDI tracks, which contain envelopes for the lighting and those change as needed. When a light needs to be on, a certain envelope goes up, and when the colors need to shift, the envelopes for each color will shift.

And then the video is triggered by a MIDI note sent via RTP MIDI to the Resolume laptop, and that happens right at the beginning [of the song]. It’s not like a VJ would do, where they're playing with different textures and blending or whatever. It’s very much “this is the video for this song.” So that just fires once and goes off into the ether.
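To make the "one note per song" idea concrete, here is a minimal Python sketch that builds the three raw bytes of a MIDI Note On message for each video cue. It is not Jess's actual configuration: the song names, note numbers, and function names are hypothetical, and the sketch only constructs the message. Carrying it to the second laptop (for example over an RTP-MIDI session) and mapping the note to a clip on the receiving side are left to the MIDI driver and the video software.

```python
# Hypothetical mapping: each song's video is assigned its own MIDI note number.
SONG_NOTES = {
    "Song A": 60,
    "Song B": 61,
    "Song C": 62,
}


def note_on(note, velocity=127, channel=0):
    """Build a raw 3-byte MIDI Note On message.

    The status byte is 0x90 plus the zero-based channel (0-15);
    note and velocity are 7-bit values (0-127).
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def video_cue(song, channel=0):
    """Return the Note On message that should fire at the start of `song`."""
    return note_on(SONG_NOTES[song], channel=channel)
```

Because the cue is a single note at the start of the song, fixing a wrong video is just a matter of changing one note number or moving one note on the timeline.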

How do you like controlling both the audio and visuals of the show, since this is the first project where you’re doing that? Is it nice to have a holistic view of audio and visuals?

Jess: Yeah, it’s pretty awesome. After figuring it out for Pussy Riot, I started using it in my own livestreams. I've also taught some masterclasses on it and done some consulting with friends who are doing livestream shows or music videos. I really love having it all in one place. If I had to do it the conventional way, I’d have to spend so much more money on a lighting console, and have room for the console, and learn how to operate it, and of course those are more sophisticated and more performative and that’s why LDs (lighting designers) can sit there and rock and busk a show.

I do start designing my shows by doing a busk performance using MIDI controllers that are mapped to all the different color envelopes necessary to talk to the lights, but I love having everything in one place, because from my software nerd perspective, I only have to run one program. I don’t have to have four things running on one laptop and then start worrying about the stability of those programs consuming resources etc. Then just for convenience of editing, I’m not sending a timecode somewhere that then can get out of sync. No, it’s just right here and if the video is wrong then I just move the MIDI note.

On the topic of the second computer running Resolume, how and why did you decide the best way to communicate with that computer was via MIDI over RTP?

Jess: Well for one thing, RTP can go wireless or wired. I knew I wanted wired, because wireless is fine, but I didn’t want there to be any chance of anybody hacking a wireless router, so that was one consideration. I also didn’t want any network lag to fuck it up. I knew it was going to be RTP MIDI because even though there are plugins, I prefer this way. It’s such a simple, low cost process to fire a MIDI note as opposed to having another plugin loaded.

I’m crazy organized about my Ableton playback sessions, because I’ve seen such catastrophic issues resulting from people adding plugins and not realizing how many resources they are consuming. I hardly even put on EQs or Auto Filter or anything. I really keep everything absolutely minimal. If I can edit and re-render something and just have Live doing one thing - playing .wav files and MIDI notes, then that’s a huge win. With both of those laptops living on stage while I’m all the way in the back of the crowd mixing FOH, if shit goes down, it’s going to be a few minutes to get it back up, and that is always the most stressful situation. So, I was like “yup, let’s just fire a MIDI note and that’s fine.”

After building this custom system for Pussy Riot, are there any alternative tools or workflows you’ve come across that you could recommend to others who might be new to lighting or visuals?

Jess: If anything, I’ve expanded my light programming more. I have more lights at my house than we would have taken on tour and I’ve added the MIDI controllers to it. I would suggest to anybody to utilise MIDI controller mapping. I have an Akai MPK and an APC-style controller. I basically have 8 knobs on one and 9 faders on the other, and I use that to busk the show out. I get a feel for it and I record that as I’m listening to the track. That’s what I did for the performance we just did (above). I wasn’t doing that before, but I would definitely recommend it because it’s much more accessible than trying to click around on nine different envelopes. That gets old very quickly.

Another cool thing that I figured out after programming their show was that if you’re more of a Session View performer, triggering samples and loops etc., you can also add little MIDI clips with envelopes into clip slots. So if you’re triggering samples, you could be triggering light at the same time, so you could play the lights as you’re playing your loops and one-shots and whatever you may be doing in Session View. You can also cross-map stuff. It’s all a question of sending the right envelope to the DMXIS box, and you could do that in any number of ways. It’s almost like sidechaining if you think about it, but you could create some wild effects just from taking that data from one place to another and handing it to the DMXIS box.
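As a rough illustration of what "sending the right envelope" amounts to, the Python sketch below scales normalized envelope values (0.0 to 1.0) into the 8-bit levels (0 to 255) that DMX channels carry, and places them in a 512-channel frame. It does not talk to a DMXIS box or reflect how that product is driven from Live; the fixture layout, channel offsets, and function names are assumptions for illustration only.

```python
# Hypothetical fixture layout: channel offsets relative to the fixture's
# start address. Real fixtures document their own channel order.
FIXTURE_OFFSETS = {"red": 0, "green": 1, "blue": 2, "strobe": 3}


def to_dmx(value):
    """Scale a normalized envelope value (0.0-1.0) to a DMX level (0-255)."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255)


def build_frame(envelopes, start_address=1):
    """Build one 512-channel DMX frame from named envelope values.

    `start_address` is the fixture's 1-based DMX address; channels that
    have no envelope stay at 0.
    """
    frame = bytearray(512)
    for name, value in envelopes.items():
        frame[start_address - 1 + FIXTURE_OFFSETS[name]] = to_dmx(value)
    return bytes(frame)
```

For example, `build_frame({"red": 1.0, "strobe": 0.2}, start_address=10)` sets channel 10 to full and channel 13 to a low strobe level. Recording knob or fader movements as envelopes, as described above, simply produces a stream of these values over time.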

CLAUDE: Synapse

CLAUDE is an audiovisual artist based in Seoul, South Korea. He creates digital organic and abstract generative objects and glitch sounds based on real-time data, in an attempt to break the boundaries of the audio and visual between the artist himself and the audience. Synapse is one of his recent audiovisual works that embodies images which appear abstractly in human memories and emotions, and in complex neural movements between organisms and cells.

What is your background in creating visuals for music? How did you end up using TouchDesigner for visuals?

I’ve always been interested in live performance. When I started making electronic music, I was looking for a way to express my work in a performance environment. I was artistic as a child, and I decided that I should create a video to be shown during my performances. While studying audio and visual performance (on my own - primarily via Ableton's YouTube channel and a lot of the official wiki and community of TouchDesigner), I went to a festival called Mutek Tokyo in 2017, and I found out that many artists I saw there were using TouchDesigner. Being able to create visuals that reacted to my music in real time was enough to get my attention, and I immediately started diving into the program.

What is Synapse about, and how do the audio and visuals reflect that?

Synapse is intended to make one rethink human memories, emotions, and the structure of life. The audio signal generated by Live is applied to the visuals in real time, to create the shapes and transformation of the objects being used.

You mentioned that your work incorporates generative objects based on real-time data. What kind of data are you using, and are you incorporating these techniques into both the audio and visuals?

I use real-time nature data found online, for example, on the climate and water levels of different countries. After analyzing and arranging the data in Excel, I send the assigned OSC messages to TouchDesigner. I can also send these OSC messages to Live. Then, real-time content control using data becomes possible and is applied to sound production algorithms and the visual production.

A still from Synapse that expresses the connection of cells and the complex nerves of humans in a landscape form

For live performance, do you have the visuals running on the same computer as Ableton Live?

I use a separate laptop for each. One for live music and another for live visuals. The two computers communicate via OSC using either Wi-Fi or a LAN cable. There is no separate program used for OSC communication - just TouchDesigner's OSC In/Out and Max for Live.
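For a sense of what travels between the two laptops, here is a minimal Python sketch that encodes a single-float OSC message by hand and sends it over UDP using only the standard library. It is not CLAUDE's setup: the address `/water/level`, the host, and the port are placeholders, and in practice a Max for Live device or an OSC library would handle this step.

```python
import socket
import struct


def _pad(data):
    """Null-terminate an OSC string and pad it to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, value):
    """Encode an OSC message carrying one 32-bit float argument.

    Layout: padded address string, padded type tag string (",f"),
    then the float in big-endian byte order.
    """
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def send(address, value, host="127.0.0.1", port=7000):
    """Send one OSC message over UDP. Host and port are placeholders."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, value), (host, port))
```

A call such as `send("/water/level", 0.5, host="192.168.0.20", port=7000)` would deliver the value to whatever is listening on that port of the visuals machine, assuming the receiver is configured with the same port and address.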

It looks like you’re using the GrabberSender Max for Live device to communicate between TouchDesigner and Ableton Live via OSC. Can you explain why you are using this method to communicate between the two programs?

Personally, I feel that using OSC is more detailed and convenient than MIDI. By using LiveGrabber, almost all parameters and MIDI notes used in Live can be converted into OSC signals. This offers infinite possibilities when creating audiovisual works. As an artist, I use it because it’s a format that cannot limit my creative imagination.

After your performance of Synapse at Artechouse in Washington DC, you mentioned the importance of the venue space you’re performing in. What specific things made that space so perfect for you to perform Synapse? What would your ideal venue look like?

Artists have something they want to express to the audience through their work, and the venue is very important for that. Artechouse is a 360-degree immersive space, and it was a really great place to perform Synapse, because it is a work about human memories and the structure of life. The screen and audio really have to immerse and overwhelm the audience. Whether it is an immersive, large scale venue or a very small space, the space that can best express the artist’s vision is the most ideal place.

Do you have advice for musicians who want to explore the world of visuals for live performance but are just getting started?

Think about what you want to express first. The influence of music on us, and the content that can be conveyed, is limitless, but the moment we start using video in our work we can choose a more concrete focus for the work. It may be straightforward or abstract, but let’s start by thinking carefully about which parts will make the audience feel something.

Text and interviews: Angelica Tavella