Audio-reactive visuals: Interview with Synnack & 0xf8

Synnack is the artistic moniker of Clint Michael Sand, an experimental electronic artist and the creator and host of the Max for Live user community at www.maxforlive.com. Starting in 2007, Sand teamed up with Jennifer McClain, aka 0xf8, to create live visuals for Synnack performances, in which custom footage is remixed and manipulated in real time. We recently spoke to Clint and Jennifer about their unique, reactive audio-visual performance setup.

How did your collaboration with Jennifer come about? Had you previously done visual work yourself?

Clint: The Synnack live shows had only just started incorporating video, and I was simply using MIDI to trigger pre-rendered found videos. Jennifer and I started working together to turn those early ideas into something unique and dynamic. It's common for laptop performers to add a visual element to their setup to keep the audience focused, but simply combining VJ-style video clips with music seemed clichéd to me. I wanted to create something new, where the visuals were just as live and dynamic as the music could be and much more intimately linked to the sound, and hence more engaging to an audience.

What data are you sending to Jennifer from your set when performing Live? Do different parts in your set trigger different visual changes?

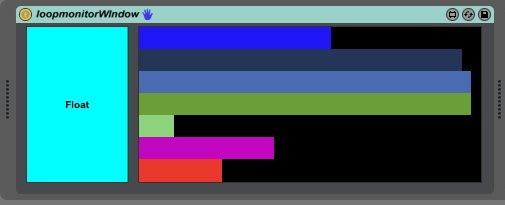

Clint: Every song I play has a different set of data that gets sent over in a different way. The kick drum, snare, and drum loop tracks send their amplitude as numerical values over OSC (Open Sound Control). Ambient clips send just their high-end frequency information. Along with these numbers that represent the audio, a series of Max for Live devices send information about Ableton Live itself: when the transport is started or stopped, when the first or last scene of a song is triggered, the name of the current track, when specific MIDI notes are played, and so on. All of this gets routed by a Max patch on Jennifer's laptop to manipulate Quartz Composer compositions and video effects. You can read the details about how all this works on my blog at www.synnack.com/blog.
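To make the OSC side concrete, here is a minimal Python sketch of what a single amplitude message might look like on the wire, following the OSC 1.0 encoding rules: a null-terminated address string padded to four bytes, a type-tag string (`,f` for one float), then a big-endian 32-bit float. OSC is typically carried over UDP. The address `/synnack/kick/amp`, the helper names, and the host and port are hypothetical; Clint's actual Max for Live devices build their messages inside Max, not in Python.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_float(address: str, value: float) -> bytes:
    """Encode an OSC message carrying a single 32-bit float argument."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern, e.g. /synnack/kick/amp
        + osc_pad(b",f")                  # type tags: one float argument
        + struct.pack(">f", value)        # OSC numbers are big-endian
    )


def send_amplitude(sock: socket.socket, host: str, port: int, track: str, amplitude: float) -> None:
    """Send one amplitude reading for a track (hypothetical address scheme /synnack/<track>/amp)."""
    packet = encode_osc_float(f"/synnack/{track}/amp", amplitude)
    sock.sendto(packet, (host, port))
```

Calling `send_amplitude(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), "192.168.0.2", 9000, "kick", 0.82)` would send one datagram per reading; the receiving address and port here are placeholders.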

Above: demo video of Synnack visuals

How did you first approach working with Clint? Did you compose/record the visuals separately, or with his music in mind?

Jennifer: Initially, the visuals came after the music was produced. When listening to a Synnack song, I'll have a picture in my head of something I want to create. Once I produce it, I then determine which video effect parameters to expose to the incoming Max for Live data to achieve a specific look. Early 20th-century Surrealist painters were known for playing a game they called "Exquisite Corpse," in which players alternate turns writing single lines of text on a sheet of paper to create a literary work. We're moving toward a similar, much more collaborative workflow, where Clint will also draw inspiration from my video work to create music, just as I draw inspiration from his music to create visuals. For example, I might produce a visual that inspires him to author a sound, and then I iterate on the visual based on the music he has created, and vice versa.

Can you walk us through what happens when you receive an OSC message from Clint over UDP? How does that interface with your live setup?

Jennifer: The OSC message is first received by a Max patch. Within this patch, I can set specific acceptable ranges for received values, scale the received data accordingly, and map it to any effect parameter. These parameters can be associated with effects on a specific video layer or on the overall composition, or be mapped to values that control the Quartz Composer files I'm using within the set.
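The receive-and-scale step described here can be sketched in Python: decode a float-only OSC message, clamp the value to an acceptable input range, and map it linearly onto an effect parameter's output range (roughly what a Max `clip` followed by `scale` would do). This is an illustrative assumption, not Jennifer's actual Max patch; only the `f` type tag is handled, the function names are hypothetical, and the ranges are made up.

```python
import struct


def read_osc_string(packet: bytes, offset: int) -> tuple[str, int]:
    """Read a null-terminated, 4-byte-padded OSC string; return it and the next offset."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    # Skip the terminator and any padding up to the next 4-byte boundary
    return text, (end + 4) & ~3


def decode_osc_floats(packet: bytes) -> tuple[str, list[float]]:
    """Decode an OSC message whose arguments are all 32-bit floats."""
    address, offset = read_osc_string(packet, 0)
    tags, offset = read_osc_string(packet, offset)
    values = []
    for tag in tags[1:]:  # skip the leading ',' of the type-tag string
        if tag != "f":
            raise ValueError(f"unsupported type tag: {tag}")
        values.append(struct.unpack_from(">f", packet, offset)[0])
        offset += 4
    return address, values


def scale(value: float, in_lo: float, in_hi: float, out_lo: float, out_hi: float) -> float:
    """Clamp value to [in_lo, in_hi] (assumes in_lo < in_hi), then map it linearly to [out_lo, out_hi]."""
    clamped = min(max(value, in_lo), in_hi)
    return out_lo + (clamped - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)
```

Narrowing the input range (for example, accepting only 0.2 to 0.6 of a kick's amplitude) makes the effect respond more dramatically to smaller changes, which is one way adjusting ranges shapes the look.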

One of Clint's custom Max for Live devices for sending data

How much control do you have over the live visuals? How much control comes automatically from Clint? Can you choose to "ignore" messages from Clint?

Jennifer: I have a significant amount of manual control over the live visuals. I determine which clips actually get played and when, as well as the specific impact that the audio data has on the effects themselves through my ability to adjust the acceptable ranges for received values. The audio data determines the timing and the speed of the effect parameter changes, but I determine which effects are impacted and how much they are changed. I can choose to bypass messages from Clint, but more often, I select the content, manipulate effect parameters, and adjust the OSC input ranges to achieve a desired look.
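The division of control described here, where incoming audio data sets the timing while the visual artist decides which parameters respond, how strongly, or not at all, can be modeled as a small routing table with adjustable input ranges and a per-address bypass flag. The `Mapping` and `Router` classes and the parameter name `layer1.blur` are hypothetical; this is a Python sketch of the idea under those assumptions, not a description of the actual setup.

```python
from dataclasses import dataclass


@dataclass
class Mapping:
    """Hypothetical routing entry: one OSC address driving one effect parameter."""
    parameter: str
    in_lo: float   # adjustable acceptable input range
    in_hi: float
    out_lo: float  # range of the effect parameter
    out_hi: float
    bypass: bool = False


def scale(value: float, in_lo: float, in_hi: float, out_lo: float, out_hi: float) -> float:
    """Clamp value to [in_lo, in_hi] (assumes in_lo < in_hi), then map it linearly to [out_lo, out_hi]."""
    clamped = min(max(value, in_lo), in_hi)
    return out_lo + (clamped - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


class Router:
    """Routes incoming (address, value) pairs to effect parameters."""

    def __init__(self) -> None:
        self.mappings: dict[str, Mapping] = {}
        self.parameters: dict[str, float] = {}

    def handle(self, address: str, value: float) -> None:
        mapping = self.mappings.get(address)
        if mapping is None or mapping.bypass:
            return  # unmapped or bypassed: leave the current parameter value alone
        self.parameters[mapping.parameter] = scale(
            value, mapping.in_lo, mapping.in_hi, mapping.out_lo, mapping.out_hi
        )
```

In this sketch, bypassing freezes a parameter at its last value rather than resetting it; that is one plausible choice, and a live rig might instead ease the parameter back to a default.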